https://data-flair.training/blogs/python-ai-tutorial/

A Novel Algorithm Enables Statistical Analysis of Time Series Data

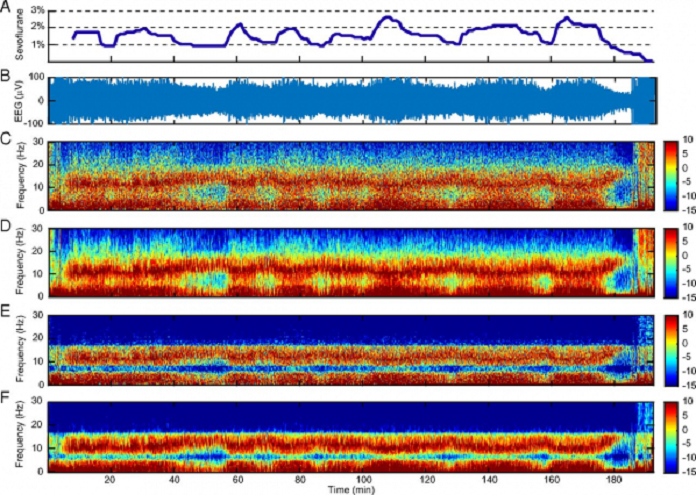

MIT scientists have developed a novel approach to analyzing time series data sets using a new algorithm, termed state-space multitaper time-frequency analysis (SS-MT). SS-MT provides a framework for analyzing time series data in real time, enabling researchers to work in a more informed way with large data sets that are nonstationary, i.e., whose characteristics evolve over time.

Using a novel analytical method they have developed, MIT researchers analyzed raw brain activity data (B). The spectrogram shows decreased noise and increased frequency resolution, or contrast (E and F) compared to standard spectral analysis methods (C and D). Image courtesy of Seong-Eun Kim et al.

Whether the task is tracking brain activity in the operating room, seismic vibrations during an earthquake, or biodiversity in a single ecosystem over a million years, measuring the frequency of an occurrence over a period of time is a fundamental data analysis task that yields critical insight in many scientific fields.

The new approach allows analysts not only to quantify the shifting properties of data but also to make formal statistical comparisons between arbitrary segments of the data.

Emery Brown, the Edward Hood Taplin Professor of Medical Engineering and Computational Neuroscience, said, “The algorithm functions similarly to the way a GPS calculates your route when driving. If you stray from your predicted route, the GPS triggers a recalculation to incorporate the new information.”

“This allows you to use what you have already computed to get a more accurate estimate of what you’re about to calculate in the next time period. Current approaches to analyses of long, nonstationary time series ignore what you have already calculated in the previous interval leading to an enormous information loss.” ……
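The idea in the quote, carrying the previous interval's estimate forward instead of discarding it, can be illustrated with a scalar random-walk Kalman filter. This is only a minimal sketch under stated assumptions, not the SS-MT algorithm itself: the function name, the `state_var` and `obs_var` parameters, and the per-window values are all hypothetical, and the real method operates on multitaper spectral coefficients rather than a single number per window.

```python
def kalman_smooth_windows(window_estimates, state_var=0.5, obs_var=2.0):
    """Recursively refine per-window estimates with a random-walk Kalman filter.

    window_estimates: noisy values computed independently on consecutive windows
    state_var: assumed variance of the true quantity's drift between windows
    obs_var: assumed variance of the noise in each window's raw estimate
    """
    estimate = window_estimates[0]   # start from the first raw estimate
    error_var = obs_var              # and its assumed uncertainty
    filtered = [estimate]
    for raw in window_estimates[1:]:
        # Predict: the quantity may have drifted, so uncertainty grows.
        predicted_var = error_var + state_var
        # Update: blend the carried-forward estimate with the new raw value.
        gain = predicted_var / (predicted_var + obs_var)
        estimate = estimate + gain * (raw - estimate)
        error_var = (1.0 - gain) * predicted_var
        filtered.append(estimate)
    return filtered


# Made-up per-window power values with a jump halfway through.
raw_power = [10.0, 12.0, 9.0, 11.0, 30.0, 31.0, 29.0]
filtered = kalman_smooth_windows(raw_power)
print(filtered)
```

Each filtered value is a weighted blend of the previous estimate and the new window's raw value, so earlier computation is reused rather than thrown away. A larger `state_var` lets the estimate follow abrupt changes faster, at the cost of less smoothing.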

Full post: https://www.techexplorist.com/novel-algorithm-enables-statistical-analysis-time-series-data/

Abstract: http://www.pnas.org/content/early/2017/12/15/1702877115

AutoML for large scale image classification and object detection

A few months ago, we introduced our AutoML project, an approach that automates the design of machine learning models. While we found that AutoML can design small neural networks that perform on par with neural networks designed by human experts, these results were constrained to small academic datasets like CIFAR-10 and Penn Treebank. We became curious how this method would perform on larger, more challenging datasets, such as ImageNet image classification and COCO object detection. Many state-of-the-art machine learning architectures have been invented by humans to tackle these datasets in academic competitions.

In Learning Transferable Architectures for Scalable Image Recognition, we apply AutoML to the ImageNet image classification and COCO object detection datasets, two of the most respected large-scale academic datasets in computer vision. These two datasets prove a great challenge for us because they are orders of magnitude larger than the CIFAR-10 and Penn Treebank datasets. For instance, naively applying AutoML directly to ImageNet would require many months of training our method.

To be able to apply our method to ImageNet we have altered the AutoML approach to be more tractable to large-scale datasets:

- We redesigned the search space so that AutoML could find the best layer which can then be stacked many times in a flexible manner to create a final network.

- We performed architecture search on CIFAR-10 and transferred the best learned architecture to ImageNet image classification and COCO object detection.

With this method, AutoML was able to find the best layers that work well not only on CIFAR-10 but also on ImageNet classification and COCO object detection. These two layers are combined to form a novel architecture, which we called “NASNet”.

Our NASNet architecture is composed of two types of layers: Normal Layer (left) and Reduction Layer (right). These two layers are designed by AutoML.
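The stacking idea described above can be sketched in a few lines. This is a rough illustration under assumptions, not NASNet's actual configuration: `build_network`, the number of stacks, and the cells per stack are hypothetical names and values chosen only to show how one searched pair of layers can be reused to build networks of different sizes.

```python
def build_network(normal_cell, reduction_cell, num_stacks=3, cells_per_stack=2):
    """Return the ordered list of cells for a network built by stacking.

    The same two cell designs are repeated; only the repeat counts change
    between a small network and a large one.
    """
    layers = []
    for stack in range(num_stacks):
        # Repeat the normal cell several times within each stack.
        layers.extend([normal_cell] * cells_per_stack)
        # Place a reduction cell between stacks (not after the last one).
        if stack < num_stacks - 1:
            layers.append(reduction_cell)
    return layers


# Hypothetical sizes: a shallow network for search, a deeper one for transfer.
small_net = build_network("normal", "reduction", num_stacks=3, cells_per_stack=2)
large_net = build_network("normal", "reduction", num_stacks=3, cells_per_stack=6)
print(len(small_net), len(large_net))
```

Because the search only has to discover the two cell designs, it can run on a small dataset, and the resulting cells can then be stacked more deeply for a larger one.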

source: https://research.googleblog.com/2017/11/automl-for-large-scale-image.html

NVIDIA TensorRT

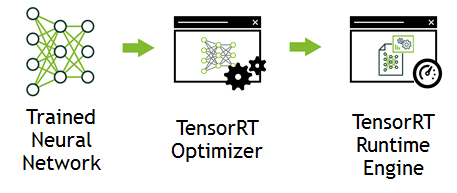

Deep Learning Inference Optimizer and Runtime Engine

NVIDIA TensorRT™ is a high-performance deep learning inference optimizer and runtime for deep learning applications. TensorRT can be used to rapidly optimize, validate, and deploy trained neural networks for inference to hyperscale data centers, embedded, or automotive product platforms.

Developers can use TensorRT to deliver fast inference using INT8 or FP16 optimized precision that significantly reduces latency, as demanded by real-time services such as streaming video categorization on the cloud or object detection and segmentation on embedded and automotive platforms. With TensorRT, developers can focus on developing novel AI-powered applications rather than performance tuning for inference deployment. The TensorRT runtime ensures optimal inference performance that can meet the needs of even the most demanding throughput requirements.
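To give a feel for what INT8 precision means, the sketch below applies plain symmetric linear quantization to a handful of made-up weights. It is an assumption-laden illustration of the general technique only: it does not use the TensorRT API, and it is not TensorRT's calibration procedure, which the text above does not describe. The function names and sample values are hypothetical.

```python
def quantize_int8(values):
    """Symmetric linear quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    # One scale for the whole list; fall back to 1.0 if all values are zero.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Map the 8-bit integers back to approximate float values."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.03, 1.0]          # made-up example weights
q_weights, scale = quantize_int8(weights)
restored = dequantize(q_weights, scale)
print(q_weights, restored)
```

Each value is stored in 8 bits instead of 32, and the round trip loses at most about half a quantization step per value. That kind of reduced storage and arithmetic cost is what lower-precision inference trades against a small loss of accuracy.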

What’s New in TensorRT 2

TensorRT 2 is now available as a free download to the members of the NVIDIA Developer Program.

- Deliver up to 45x faster inference under 7 ms real-time latency with INT8 precision

- Integrate novel user-defined layers as plugins using the Custom Layer API

- Deploy sequence-based models for image captioning, language translation, and other applications using LSTM and GRU Recurrent Neural Network (RNN) layers

TensorRT 3 for Volta GPUs - Interest List

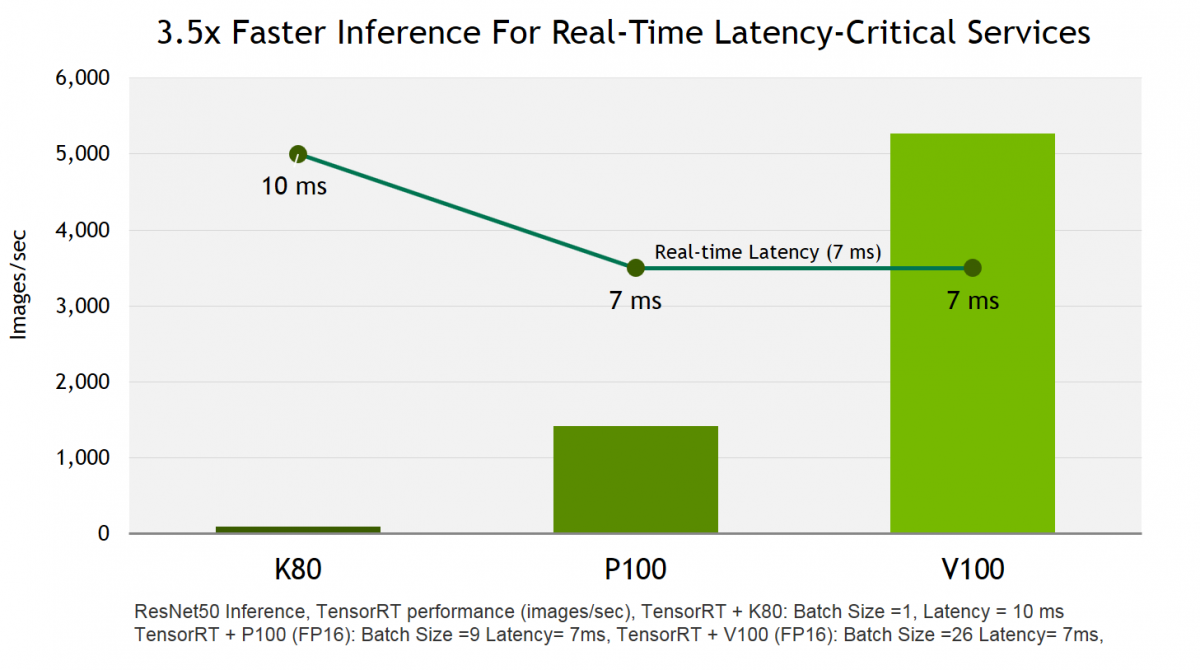

TensorRT 3 delivers 3.5x faster inference on the Volta-powered Tesla V100 than on Tesla P100. Developers can optimize models trained in the TensorFlow or Caffe deep learning frameworks to generate runtime engines that maximize inference throughput, making deep learning practical for latency-critical services in hyperscale datacenters, embedded, and automotive production environments.

With support for Linux, Microsoft Windows, BlackBerry QNX, and Android operating systems, developers can deploy AI-powered applications everywhere, from data centers to mobile, automotive, and embedded edge devices.

Sign up below to be notified when TensorRT 3 becomes available.